Paris-based artificial intelligence startup Mistral AI said today it’s open-sourcing a new, lightweight AI model called Mistral Small 3.1, claiming it surpasses the capabilities of similar models created by OpenAI and Google LLC.

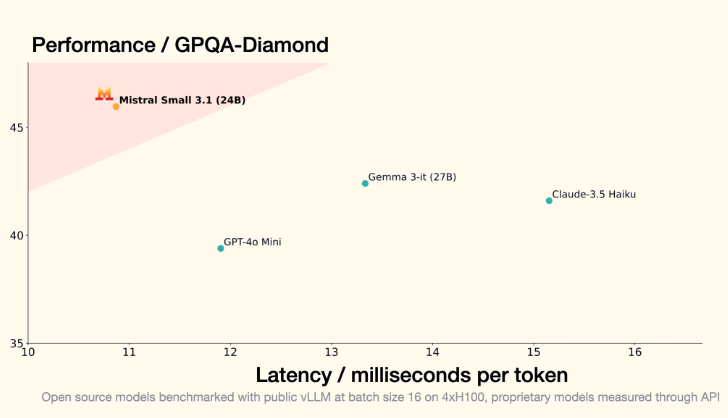

It’s a move that’s likely to escalate the race to develop powerful yet low-cost large language models. Mistral Small 3.1 can process both text and images with just 24 billion parameters, making it a fraction of the size of many of today’s most advanced models while still being able to compete with them.

In a blog post, Mistral explained that Mistral Small 3.1 offers “improved text performance, multimodal understanding and an expanded context window of up to 128,000 tokens,” compared with its predecessor, Mistral Small 3.

The company added that the new model delivers inference speeds of around 150 tokens per second, which makes it well suited for applications that demand rapid responses. By all accounts, it’s an impressive technical achievement that highlights Mistral AI’s alternative strategy. Many of its advances stem from a focus on algorithmic improvements and training optimization techniques, rather than simply throwing ever-increasing amounts of computing resources at newer models, as its rivals do. This approach allows it to maximize the performance of smaller model architectures.

The main advantage of Mistral AI’s approach is that it makes AI more accessible. By building powerful new models that can run on relatively modest infrastructure (in this case, a single RTX 4090 graphics processing unit or a Mac laptop with 32 gigabytes of random-access memory), the company is making it possible to deploy more advanced AI on much smaller devices, including in remote locations.

Mistral’s approach may prove to be more sustainable than simply increasing the scale of AI models, and with the likes of China’s DeepSeek Ltd. pursuing a similar strategy, its much better-funded rivals may ultimately have no choice but to follow the same path.

The French company was founded in 2023 by former AI researchers from Google’s DeepMind unit and Meta Platforms Inc., and it has already established itself as Europe’s top AI company. To date, it has raised more than $1.04 billion in capital at a valuation of about $6 billion. It’s a lot of money, to be sure, but it pales into insignificance compared to the reported $80 billion valuation of OpenAI.

Mistral Small 3.1 is the latest in a string of recent releases by the company. Last month, it debuted a new model called Saba that’s specifically focused on Arabic language and culture, and that was followed by the launch of Mistral OCR this month. Mistral OCR is a specialized model that uses optical character recognition to convert PDF documents into Markdown files, making them more readily accessible to large language models.

Those specialist products bulk out a broader portfolio of AI models that includes the company’s current flagship offering Mistral Large 2, a multimodal model called Pixtral, a code-generating model called Codestral, and a family of highly optimized models for edge devices known as Les Ministraux.

It’s a diverse portfolio that highlights how Mistral AI is looking to tailor its innovations to market demand, creating various purpose-built systems to meet growing needs, rather than trying to match OpenAI and Google head-on.

The company’s commitment to open source is also a distinctive strategic choice in an industry dominated by closed, proprietary models. It has paid off to an extent, too, with “several excellent reasoning models” already built on top of its lightweight predecessor, Mistral Small 3. That suggests open collaboration can accelerate AI development far faster than any single company could manage on its own.

By making its models open-source, Mistral also benefits from expanded research and development capabilities provided by the wider AI community, allowing it to compete with better-funded competitors.

That said, Mistral’s open-source strategy also makes it harder to generate revenue, since the company must instead earn money from specialized services, enterprise deployments and unique applications that build on its foundational technologies and offer customers some other kind of advantage.

Whether Mistral’s chosen path is the correct one remains to be seen, but in the meantime it’s clear that Mistral Small 3.1 is a significant technical achievement, reinforcing the idea that powerful AI models can be made accessible in much smaller and more efficient packages.

Mistral Small 3.1 is available to download from Hugging Face, and it can also be accessed through Mistral AI’s application programming interface or on Google Cloud’s Vertex AI platform. In the coming weeks, it will also be made available through Nvidia Corp.’s NIM microservices and Microsoft Corp.’s Azure AI Foundry.

Featured image: SiliconANGLE/Dreamina